Logan & Friends

2024

Delivered near real-time instructional coaching and cut feedback navigation time 40% without letting AI override human judgment

Impact

Efficiency rate

100%

Coaching Feedback Moved From Delayed → Near Real-Time

Eliminated multi-day feedback delays, enabling teachers to reflect while lessons were still fresh.

User satisfaction

90%

90% educator satisfaction with clarity and speed

Trust increased when AI insights were contextual, optional, and reviewed by a human coach.

Navigation time

-40%

Reduced feedback navigation time by 40% (6 min → 3.5 min)

Teachers spent less time searching, freeing up more time to reflect and improve.

Context

Logan & Friends is an education company that partners with schools to design equity-focused, real-world learning experiences. A core part of their work is instructional coaching: observing classroom teaching and providing feedback to help educators grow.

Instructional coaching works in theory.

In practice, it doesn’t scale well.

Feedback often arrives days or weeks after a lesson, long after its impact has faded.

The client’s vision was ambitious: to explore whether AI could reduce feedback delays by analyzing voice-recorded teaching sessions, generating standards-aligned insights, and supporting human coaches in delivering faster, more actionable guidance.

However, the scope, technical feasibility, and trust boundaries of using AI in classrooms were unclear.

TL;DR

Instructional coaching doesn’t fail because feedback is bad.

It fails because it arrives too late, without context, and without control.

As one of the product designers on the team, I helped design an AI-assisted coaching system that prioritized timeliness, clarity, and human judgment over automation.

By anchoring AI insights to real classroom moments and tightly constraining when and how AI intervened, we reduced navigation friction, eliminated coaching delays, and preserved trust.

Role

Product Designer

Team

4 Product Designers · Client Designer (PhD) · Subject Matter Experts

Domain

EdTech · Human-Centered AI

Timeline

30 Weeks

Problem

The surface problem was delayed feedback.

The deeper problem was misalignment between how coaching tools are designed and how teachers actually reflect and improve.

What wasn’t working

Feedback often arrived 72+ hours after observation

Guidance felt generic and disconnected from real classroom moments

Teachers struggled to review long, dense feedback within busy schedules

Existing tools added cognitive load instead of reducing it

At the same time, introducing AI added new risks:

Teachers feared being judged or evaluated by algorithms

Recording classrooms raised privacy and consent concerns

Automation risked removing the empathy central to good coaching

The challenge wasn’t whether AI could generate feedback.

It was whether it could do so without breaking trust.

Research

To ground the product in real educator needs, I led and synthesized research across multiple methods.

Research methods

5 in-depth teacher interviews

12 educator survey responses

7 competitor analyses

Review of 20+ research papers on instructional coaching and classroom observation

Multiple rounds of usability testing on early concepts

Key research insights

1

Timeliness matters more than volume

Teachers consistently said feedback loses value when it arrives days later, even if it’s detailed.

2

Context builds trust

Educators were far more receptive to feedback when it was tied to specific classroom moments they could recall.

3

AI acceptance is conditional

Most teachers were open to AI-assisted feedback only if it didn’t feel judgmental, intrusive, or opaque.

4

Navigation friction kills adoption

Usability testing showed teachers spent excessive time finding relevant feedback, increasing frustration and disengagement.

Empathy and affinity mapping reinforced a clear pattern:

Teachers wanted bite-sized, contextual guidance that respected their time and professional judgment.

The Guiding Principle

AI should surface insight, not deliver judgment.

This principle guided every design decision.

AI’s role: listen, identify patterns, surface moments worth attention

Human coaches’ role: contextualize, interpret, and guide growth

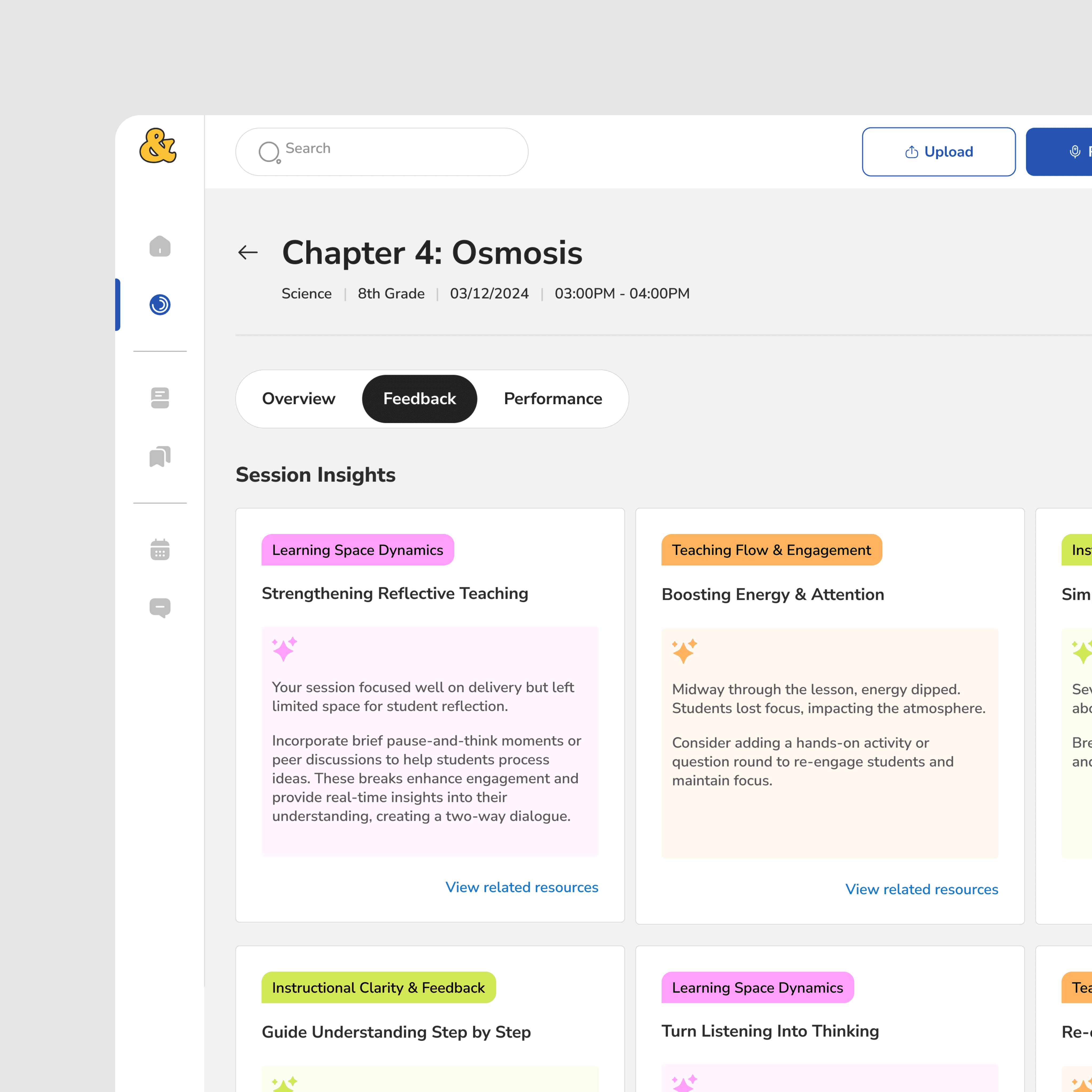

Early Direction: Insight Summaries

I designed the feedback screen to give teachers a clear snapshot of session insights, summarized into categorized cards.

Feedback is grouped into clear categories with color-coded tags (1) that highlight framework patterns, paired with simple, action-focused notes (2) that make it feel like guidance rather than evaluation. A “View related resources” link (3) provides deeper context and help without cluttering the view, keeping feedback approachable and scannable.

What worked

Clear structure

Fast overview

What failed

Teachers couldn’t tell why feedback appeared

No connection to specific classroom moments

Feedback felt generic, the same frustration teachers already had

Decision 1

Anchor Feedback to Real Classroom Moments

Based on research and testing, I redesigned feedback to be time-based and contextual.

Switched to a vertical, chronological layout

Added timestamps tied directly to the lesson

Renamed “Session Insights” to “Action Items”

That way, teachers can instantly see what each note refers to and why it was flagged, then put those actions into practice.

Before

After

Anchoring feedback to real moments made AI feel observational, not evaluative.

This was the first major trust unlock.

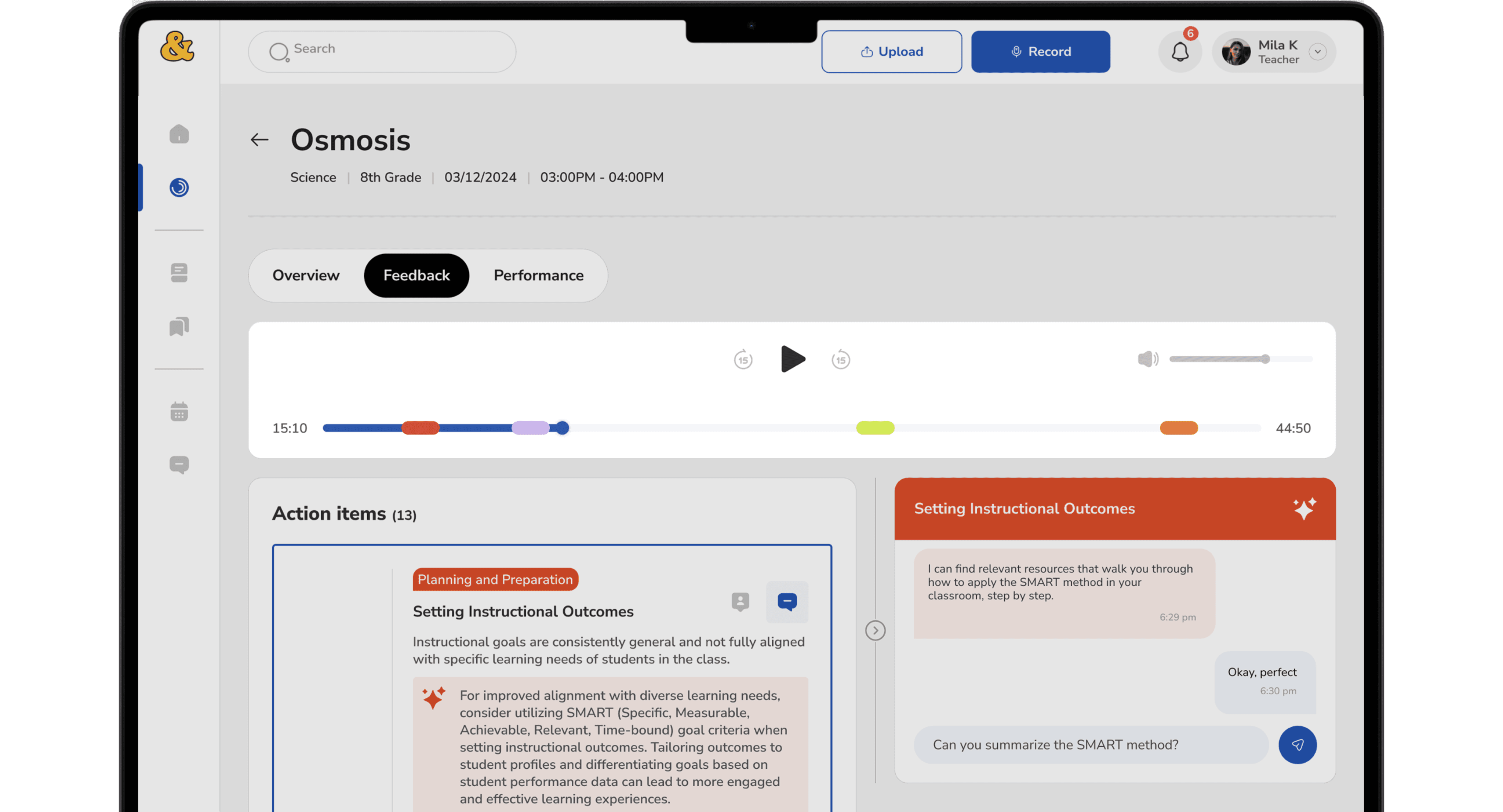

Decision 2

Despite improved context, testing showed teachers still spent more than 6 minutes navigating feedback.

So I redesigned the feedback experience to be discoverable: scannable first, deep second.

Added an audio player with timeline markers

Allowed quick jumps to relevant moments
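The case study does not describe an implementation, so the following is only a minimal sketch of how timestamped feedback could drive timeline markers and quick jumps. Every name here (`FeedbackMarker`, `markerPosition`, `nextMarker`, and so on) is hypothetical and chosen for illustration.

```typescript
// Hypothetical shape of one feedback note anchored to a lesson moment.
interface FeedbackMarker {
  id: string;
  timestampSec: number; // offset from the start of the recording, in seconds
  category: string;     // framework tag shown on the feedback card
  note: string;         // short, action-focused text
}

// Return a chronologically sorted copy so cards and timeline share one order.
function sortChronologically(markers: FeedbackMarker[]): FeedbackMarker[] {
  return [...markers].sort((a, b) => a.timestampSec - b.timestampSec);
}

// Convert a marker's timestamp into a 0..1 position along the audio timeline.
function markerPosition(marker: FeedbackMarker, durationSec: number): number {
  if (durationSec <= 0) return 0;
  return Math.min(1, Math.max(0, marker.timestampSec / durationSec));
}

// Format seconds as m:ss for display next to each feedback card.
function formatTimestamp(sec: number): string {
  const whole = Math.max(0, Math.floor(sec));
  const minutes = Math.floor(whole / 60);
  const seconds = whole % 60;
  return `${minutes}:${seconds < 10 ? "0" : ""}${seconds}`;
}

// Find the next flagged moment after the current playback position,
// which is what a "jump to next moment" control would seek to.
function nextMarker(
  markers: FeedbackMarker[],
  currentSec: number
): FeedbackMarker | undefined {
  return sortChronologically(markers).find((m) => m.timestampSec > currentSec);
}
```

In a sketch like this, the feedback cards and the audio player read from the same marker list, so selecting a card and seeking the audio always point at the same classroom moment.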

Why it mattered

Teachers are time-poor. If value isn’t easy to find, intelligence doesn’t matter.

Decision 3

Make AI Optional, Contextual, and User-Invoked

Early research showed teachers were open to AI, but only if it didn’t interrupt or judge them.

What changed

AI chat was added as an on-demand assistant

It responded only to questions about selected feedback

It never surfaced unsolicited evaluations or scoring
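One way to picture these constraints is as a small gate in front of the assistant. This is an illustrative sketch only, not the project's actual logic; the type and function names (`AssistantRequest`, `gateAssistantRequest`) and the refusal messages are assumptions.

```typescript
// Hypothetical request shape: the UI records whether the teacher asked,
// and which feedback item (if any) was selected at the time.
type AssistantRequest = {
  invokedByUser: boolean;
  selectedFeedbackId: string | null;
  question: string;
};

type AssistantDecision =
  | { allowed: true; feedbackId: string }
  | { allowed: false; reason: string };

// Allow a response only when the teacher asked, about a selected item.
function gateAssistantRequest(req: AssistantRequest): AssistantDecision {
  // Never speak unprompted: no unsolicited evaluations or scoring.
  if (!req.invokedByUser) {
    return { allowed: false, reason: "AI responds only when the teacher asks" };
  }
  // Keep answers scoped to a specific piece of feedback.
  if (req.selectedFeedbackId === null) {
    return { allowed: false, reason: "Select a feedback item first" };
  }
  if (req.question.trim() === "") {
    return { allowed: false, reason: "Ask a question about the selected feedback" };
  }
  return { allowed: true, feedbackId: req.selectedFeedbackId };
}
```

Putting the checks in one place keeps the behavior predictable: the assistant either answers about the selected feedback or explains why it is staying quiet.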

Why it mattered

AI became a support tool, not an authority. Teachers stayed in control of when, how, and how much they engaged with AI.

This preserved the human core of coaching while still gaining speed.

The Final Experience

The final prototype combined:

Timestamped feedback tied to real classroom moments

Audio playback with visual markers

Clear, scannable feedback cards

Optional AI support for clarification and exploration

Human coach review before delivery

The system felt less like an evaluation tool and more like a coaching partner.

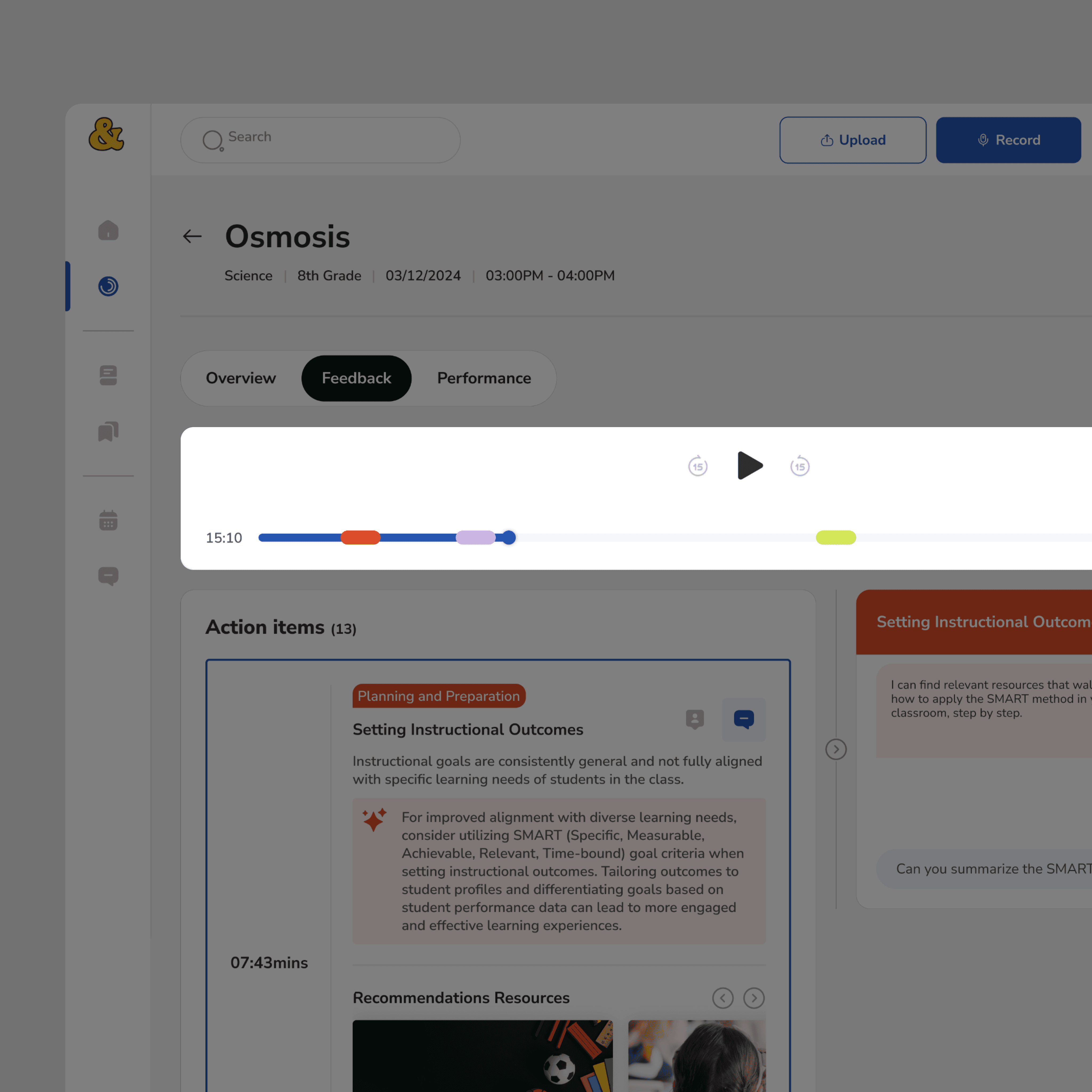

Validation & Testing

We validated clarity, efficiency, and trust through:

Expert reviews with instructional coaches

Think-aloud sessions with experienced teachers, novice teachers, and coaches

Cognitive walkthroughs and design critiques

Post-test satisfaction questionnaires

Key findings and design responses

Issue:

The audio player remained fixed at the top, which reduced the usable scroll area. The constrained scrolling made longer feedback hard to review.

Before

Fix:

I reworked the layout to prioritize reading and flow by resizing the existing containers, expanding the primary scroll area to allow continuous reading, and making the audio player sticky.

After

Execution

I led end-to-end design from concept to validation:

Defined information architecture and feedback flows

Designed low- to mid/high-fidelity prototypes

Ran multiple rounds of usability testing

Iterated based on observed behavior, not assumptions

Collaborated closely with designers, a PhD client, and subject matter experts to ensure pedagogical and technical feasibility

Design decisions were continuously tested and refined to balance clarity, trust, and speed.

Impact

Usability testing and prototype validation showed measurable improvements:

40% reduction in navigation time (6 min → 3.5 min)

Near real-time feedback, eliminating multi-day coaching delays

90% of teachers reported satisfaction with clarity and speed

Increased confidence in AI-assisted feedback when paired with human review

Most importantly, teachers described the experience as supportive, not judgmental.

AI Design Trade-offs

We intentionally traded:

Flexibility for trust

Automation for clarity

Intelligence for restraint

AI worked best when it:

stayed close to real moments

avoided abstract scoring

respected human authority

My Learnings

This project reinforced that designing AI systems is as much about restraint as capability.

1

Trust is earned through predictability, not novelty

2

AI adoption depends on timing, context, and control

3

Human judgment must remain visible in high-stakes domains

4

Iteration is how ambiguity becomes clarity

What’s Next

1

Expand coaching insights across multiple sessions

2

Strengthen privacy and consent controls

3

Validate long-term impact on teacher growth

4

Explore real classroom pilots